Kustomize your way to MongoDB ReplicaSet

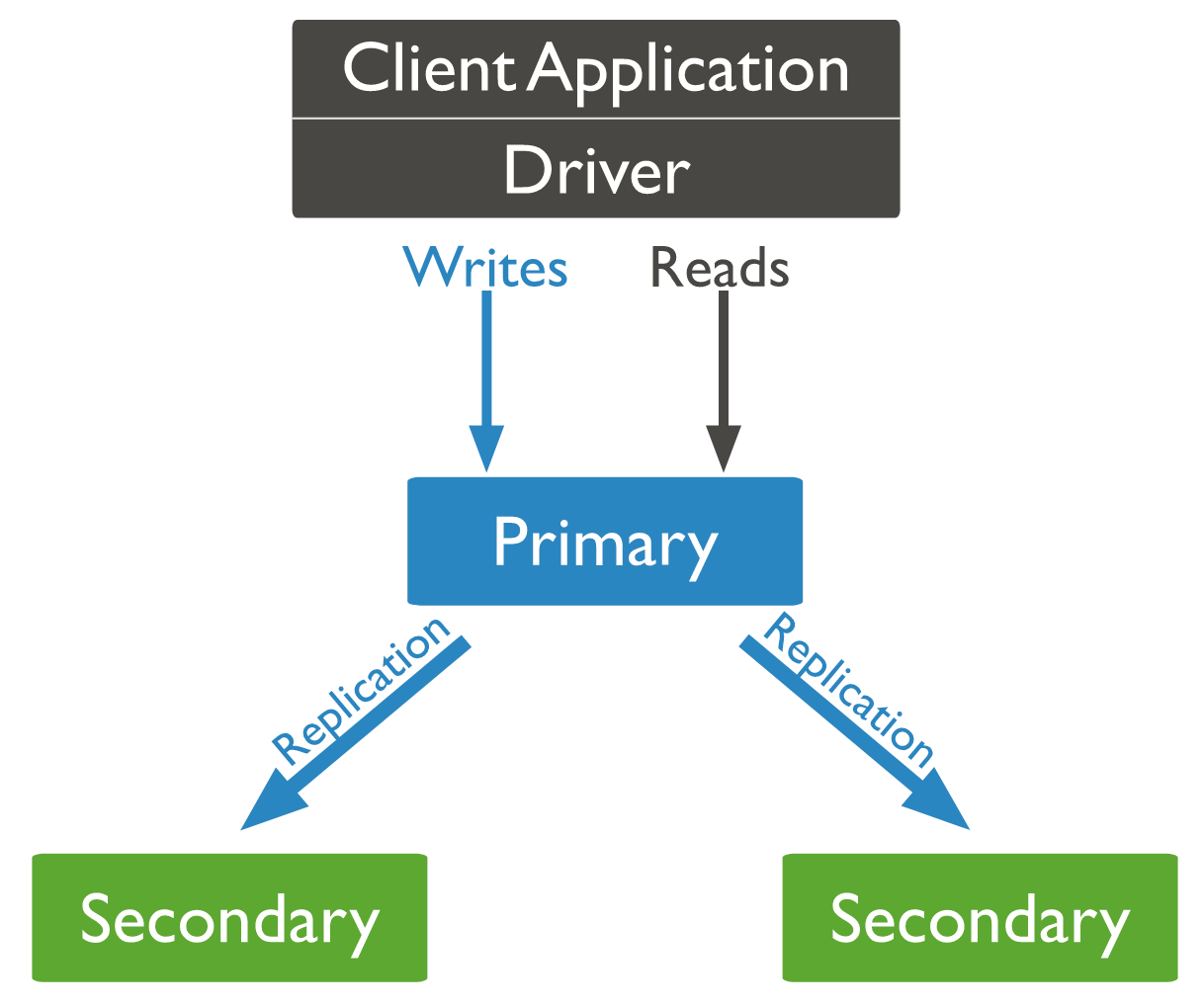

A replica set in MongoDB is a group of mongod processes that maintain the same data set. Replica sets provide redundancy and high availability and are the basis for production deployments.

In this post we will set up a production-ready MongoDB replica set on a Kubernetes cluster, using Kustomize to deploy the whole system.

Kubernetes gives us several useful capabilities, the most important here being high availability (HA): if a pod goes down, Kubernetes brings it back up, keeping the number of replicas at the count the user configured. While setting up the MongoDB replica set, we want to build on this capability.

We also want the MongoDB replica set membership to follow the number of replicas in the MongoDB StatefulSet. To do so, we run a sidecar container that keeps monitoring the pods (replicas). When a new StatefulSet replica comes up, the sidecar adds it to the MongoDB replica set; when the StatefulSet scales down, the sidecar removes the departed member from the replica set. The code for the sidecar is available on GitHub.
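At its core, such a sidecar is a reconcile loop: compare the pods that exist with the members the replica set knows about, then add or remove the difference. The following is a minimal Python sketch of just that diff, not the sidecar's actual code. The `Pod` type, the Service name "mongo", the namespace "default" and port 27017 are assumptions; a real sidecar would read pods from the Kubernetes API and apply the result on the primary with `rs.reconfig()`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    # Hypothetical, simplified view of a pod as returned by the Kubernetes API.
    name: str
    running: bool


def member_host(pod_name, service="mongo", namespace="default"):
    # Stable per-pod DNS name that a headless Service gives each StatefulSet pod.
    return f"{pod_name}.{service}.{namespace}.svc.cluster.local:27017"


def plan_membership(pods, current_members, service="mongo", namespace="default"):
    """Diff running pods against replica set members; returns (to_add, to_remove)."""
    desired = {member_host(p.name, service, namespace) for p in pods if p.running}
    current = set(current_members)
    return sorted(desired - current), sorted(current - desired)


if __name__ == "__main__":
    pods = [Pod("mongo-0", True), Pod("mongo-1", True), Pod("mongo-2", True)]
    members = [member_host("mongo-0"), member_host("mongo-1"), member_host("mongo-3")]
    to_add, to_remove = plan_membership(pods, members)
    print("add:", to_add)        # mongo-2 joined the StatefulSet
    print("remove:", to_remove)  # mongo-3 no longer exists
```

Keeping the diff a pure function makes the scale-up and scale-down cases easy to reason about separately from the API calls that apply them.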

In our example we want MongoDB to run in secure mode. Communication between the mongo nodes is secured with a shared mongo key, and mongod is started with the “auth” option and an “admin” user. A few setup scripts tailored to our application run only once in MongoDB's lifetime (on first start), through the docker-entrypoint.sh provided by MongoDB's Docker image. All those scripts are loaded into the container filesystem from ConfigMaps, and passwords are supplied through K8s Secrets. Moreover, we don't want to maintain our own copy of the image; instead, we use the mongo image from hub.docker.com, so that we can easily update mongo to the latest version.
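Kustomize can generate the ConfigMaps and the Secret from local files and literals and set the mongo image tag in one place. A minimal sketch of such a kustomization, written in Python and emitted as JSON (which YAML parsers accept). The resource file names, the ConfigMap and Secret names, the placeholder passwords and the "4.4" tag are all hypothetical, not the post's actual files.

```python
import json


def build_kustomization(mongo_tag="4.4"):
    return {
        "apiVersion": "kustomize.config.k8s.io/v1beta1",
        "kind": "Kustomization",
        # Hypothetical manifest files for the Service, RBAC and StatefulSet.
        "resources": ["service.yaml", "rbac.yaml", "statefulset.yaml"],
        # Each file becomes a ConfigMap key named after the file.
        "configMapGenerator": [
            {"name": "mongo-init", "files": ["mongo-user.sh"]},
            {"name": "mongo-scripts", "files": ["change-permission.sh"]},
            {"name": "mongo-key", "files": ["mongo.key"]},
        ],
        # Placeholders only: do not commit real passwords.
        "secretGenerator": [
            {
                "name": "mongo-secret",
                "literals": ["mongoRootPassword=change-me", "unatnahsDbPassword=change-me-too"],
            }
        ],
        # Bumping this tag is the single place to upgrade MongoDB.
        "images": [{"name": "mongo", "newTag": mongo_tag}],
    }


print(json.dumps(build_kustomization(), indent=2))
```

Saved as kustomization.yaml next to the listed files, this would be applied with `kubectl apply -k .` or `kustomize build . | kubectl apply -f -`. Kustomize appends a content hash to generated names and rewrites the references to them in the managed resources.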

Requirements

- A K8s cluster.

- Kustomize installed.

- Internet access (to pull images from Docker Hub).

We will use the official MongoDB Docker image and a sidecar image built from mongodb-k8s-sidecar.

Let's get started

I am using a GKE cluster, but the same setup runs on a local Kubernetes cluster too.

The MongoDB Docker image can run initialization scripts only the first time MongoDB starts, that is, while its data directory is still empty. We take advantage of this feature to set the root user's password and to create one more user, mainly to demonstrate that the system can be designed so that all of these scripts run only once in its lifetime.

Then we secure communication between the nodes of the replica set with a mongo key. This, too, needs nothing except YAML.

Create Scripts and Secrets

First, let's create the script we want to run on first start. The scripts are loaded into the container with a ConfigMap.
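A minimal sketch of what such a ConfigMap could look like, built in Python and printed as JSON. The official image's entrypoint executes files mounted at /docker-entrypoint-initdb.d on first start and sources `.sh` files, so the script sees the container's environment. Assumptions: the image ships the legacy `mongo` shell (MongoDB 6.0+ images ship `mongosh` instead), the password arrives in a hypothetical `UNATNAHS_DB_PASSWORD` variable, and the database name and `readWrite` role are illustrative.

```python
import json

# Sourced once by docker-entrypoint.sh on first start (data directory empty).
# Assumes the legacy `mongo` shell; UNATNAHS_DB_PASSWORD is a hypothetical variable.
MONGO_USER_SH = """#!/bin/bash
mongo \\
  --username "$MONGO_INITDB_ROOT_USERNAME" \\
  --password "$MONGO_INITDB_ROOT_PASSWORD" \\
  --authenticationDatabase admin \\
  --eval "db.getSiblingDB('unatnahs').createUser({user: 'unatnahs', pwd: '$UNATNAHS_DB_PASSWORD', roles: [{role: 'readWrite', db: 'unatnahs'}]})"
"""


def build_init_configmap(name="mongo-init"):
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        # Mounted at /docker-entrypoint-initdb.d in the mongo container.
        "data": {"mongo-user.sh": MONGO_USER_SH},
    }


print(json.dumps(build_init_configmap(), indent=2))
```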

The environment variables used by this script are filled in from a Kubernetes Secret (the mongo secrets).

“mongoRootPassword” is the password for the admin user named “root”, and “unatnahsDbPassword” is the password for the “unatnahs” user created by the mongo-user.sh script in the ConfigMap.
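A minimal sketch of the Secret and of the env entries that wire its keys into the mongo container. Values under a Secret's `data` must be base64-encoded. `MONGO_INITDB_ROOT_USERNAME` and `MONGO_INITDB_ROOT_PASSWORD` are the variables the official image reads to create the root user; the Secret name "mongo-secret" and the `UNATNAHS_DB_PASSWORD` variable are hypothetical.

```python
import base64
import json


def build_secret(name, values):
    """Kubernetes Secret manifest; `data` values must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {k: base64.b64encode(v.encode("utf-8")).decode("ascii") for k, v in values.items()},
    }


def secret_env(secret_name):
    """Env entries for the mongo container, each pointing at one Secret key."""

    def from_secret(var, key):
        return {"name": var, "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}}}

    return [
        # Read by the official image's entrypoint to create the root user on first start.
        {"name": "MONGO_INITDB_ROOT_USERNAME", "value": "root"},
        from_secret("MONGO_INITDB_ROOT_PASSWORD", "mongoRootPassword"),
        # Hypothetical variable, read by mongo-user.sh.
        from_secret("UNATNAHS_DB_PASSWORD", "unatnahsDbPassword"),
    ]


secret = build_secret("mongo-secret", {"mongoRootPassword": "change-me", "unatnahsDbPassword": "change-me-too"})
print(json.dumps(secret, indent=2))
```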

Now we create a mongo-key, which secures communication between the replica set members.
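MongoDB requires key file content of 6 to 1024 characters from the base64 set, and its documentation generates one with `openssl rand -base64 756`. A minimal Python equivalent, plus a ConfigMap wrapper matching the post's approach; the ConfigMap name "mongo-key" and key "mongo.key" are hypothetical. Mounting the key from a Secret instead would work the same way.

```python
import base64
import json
import secrets
import string

BASE64_CHARS = set(string.ascii_letters + string.digits + "+/=")


def generate_keyfile_content(num_bytes=756):
    """Random key file content, like `openssl rand -base64 756` (without line breaks)."""
    key = base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")
    # MongoDB accepts 6-1024 characters from the base64 set.
    if not 6 <= len(key) <= 1024 or not set(key) <= BASE64_CHARS:
        raise ValueError("key file content outside MongoDB's constraints")
    return key


def build_key_configmap(key, name="mongo-key"):
    return {"apiVersion": "v1", "kind": "ConfigMap", "metadata": {"name": name}, "data": {"mongo.key": key}}


print(json.dumps(build_key_configmap(generate_keyfile_content()), indent=2))
```

756 random bytes encode to exactly 1008 base64 characters with no padding, which stays under the 1024-character limit.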

Change Script Permissions

When mongod starts, the mongo-key file must have restrictive permissions: on Unix, MongoDB rejects a key file that grants group or world access. Because everything is loaded into the mongo container's filesystem through ConfigMaps, we may run into permission problems, since mongod runs as the “mongodb” user, not as “root”. We therefore fix the permissions on the fly and only then start mongod. The mongo-key ConfigMap is mounted at a temporary location and copied to its final location, because Kubernetes mounts ConfigMap entries as symlinks on a read-only volume, so their owner and permissions cannot be changed in place. We first copy the file to another location and then change the owner and permissions of the copy. A separate script does exactly that.
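The post does this step with a shell script (copy, chown, chmod). The following Python sketch mirrors the same steps so the logic can be tested outside the cluster; the official mongo image may not ship Python, so inside the container a shell version is what would actually run. The paths in the usage line and the `owner` parameter are assumptions.

```python
import os
import shutil
import stat


def install_keyfile(src, dst, owner=None):
    """Copy a ConfigMap-mounted key file into a regular file MongoDB will accept."""
    # Remove a previous copy first: a 0400 file cannot be reopened for writing by non-root.
    if os.path.lexists(dst):
        os.remove(dst)
    os.makedirs(os.path.dirname(dst), exist_ok=True)
    # copyfile follows the ConfigMap symlink and writes the content to a new regular file.
    shutil.copyfile(src, dst)
    if owner is not None:
        # Changing ownership needs root, e.g. owner="mongodb" in the official image.
        shutil.chown(dst, user=owner, group=owner)
    # Owner read-only (0400): MongoDB refuses key files with group or world permissions.
    os.chmod(dst, stat.S_IRUSR)
    return dst
```

In the container this would correspond to `install_keyfile("/tmp/mongo-key/mongo.key", "/etc/mongo-key/mongo.key", owner="mongodb")`, run as root before mongod starts.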

Create K8s Service

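Now we create a headless Service (one with `clusterIP: None`), which does not load-balance. Instead, it gives every StatefulSet pod a stable DNS name that the replica set members use to reach each other.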
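A minimal sketch of a headless Service manifest in Python. The name "mongo", the `app: mongo` label and port 27017 are assumptions. `publishNotReadyAddresses` is optional; it publishes DNS records for pods before they pass readiness checks, which helps members find each other while the replica set is still forming.

```python
import json


def build_headless_service(name="mongo", app_label="mongo", port=27017):
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": {"app": app_label}},
        "spec": {
            # "None" makes the Service headless: no virtual IP, no load balancing,
            # one DNS record per matching pod instead.
            "clusterIP": "None",
            "publishNotReadyAddresses": True,
            "selector": {"app": app_label},
            "ports": [{"name": "mongo", "port": port, "targetPort": port}],
        },
    }


print(json.dumps(build_headless_service(), indent=2))
```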

Create Service Accounts

Now we create a ServiceAccount and a ClusterRoleBinding, because the sidecar needs permission to watch the pods. You can tighten these permissions: the sidecar only needs list and watch access to pods, whereas the ClusterRoleBinding used in this setup grants much broader permissions on the cluster.
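A minimal sketch of a least-privilege alternative: a namespaced Role that allows only `list` and `watch` on pods, bound to the sidecar's ServiceAccount. The name "mongo-sidecar" and the namespace are hypothetical; if your sidecar build also reads individual pods, add `get` to the verbs.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def build_sidecar_rbac(namespace="default", name="mongo-sidecar"):
    """ServiceAccount + Role + RoleBinding limited to list/watch on pods."""
    service_account = {"apiVersion": "v1", "kind": "ServiceAccount",
                       "metadata": {"name": name, "namespace": namespace}}
    role = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        # "" is the core API group, where pods live.
        "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["list", "watch"]}],
    }
    binding = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": name, "namespace": namespace}],
        "roleRef": {"apiGroup": RBAC_API, "kind": "Role", "name": name},
    }
    return [service_account, role, binding]


print(json.dumps({"apiVersion": "v1", "kind": "List", "items": build_sidecar_rbac()}, indent=2))
```

A Role scopes the grant to one namespace, which is enough when the sidecar only watches pods of its own StatefulSet.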

MongoDB StatefulSet

Now let's go to the final step and create a StatefulSet with two containers: the actual mongo container and the sidecar.

In this StatefulSet, each ConfigMap is mounted at the location where it is needed. The mongo container first runs the permission-change script and then starts mongo with the required arguments.
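A minimal sketch of such a StatefulSet, built in Python. The container command runs the permission script and then hands over to the image's own `docker-entrypoint.sh`, so first-start init scripts still run. Every name here is hypothetical: the ConfigMaps "mongo-key", "mongo-scripts" and "mongo-init", the Service "mongo", the ServiceAccount "mongo-sidecar", the replica set "rs0", the image tags, the mount paths and the 10Gi volume size. The Secret-backed env entries are left out for brevity.

```python
import json

MONGOD_ARGS = [
    "mongod",
    "--replSet", "rs0",  # hypothetical replica set name
    "--bind_ip_all",
    "--auth",
    "--keyFile", "/etc/mongo-key/mongo.key",  # the copy made by the permission script
]


def mongo_container(image="mongo:4.4"):
    # Fix the key file first, then exec the image's entrypoint, which still runs
    # /docker-entrypoint-initdb.d scripts on first start.
    start = "bash /scripts/change-permission.sh && exec docker-entrypoint.sh " + " ".join(MONGOD_ARGS)
    return {
        "name": "mongo",
        "image": image,
        "command": ["bash", "-c", start],
        "ports": [{"containerPort": 27017}],
        # MONGO_INITDB_ROOT_* env entries from the Secret are omitted for brevity.
        "volumeMounts": [
            {"name": "mongo-key-tmp", "mountPath": "/tmp/mongo-key"},
            {"name": "scripts", "mountPath": "/scripts"},
            {"name": "init", "mountPath": "/docker-entrypoint-initdb.d"},
            {"name": "data", "mountPath": "/data/db"},
        ],
    }


def sidecar_container(image="example.com/mongodb-k8s-sidecar:latest"):
    # Hypothetical image reference; configure the sidecar as its README describes.
    return {"name": "sidecar", "image": image}


def build_statefulset(replicas=3, name="mongo", service="mongo"):
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": service,  # the headless Service providing per-pod DNS
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "serviceAccountName": "mongo-sidecar",
                    "containers": [mongo_container(), sidecar_container()],
                    "volumes": [
                        {"name": "mongo-key-tmp", "configMap": {"name": "mongo-key"}},
                        {"name": "scripts", "configMap": {"name": "mongo-scripts"}},
                        {"name": "init", "configMap": {"name": "mongo-init"}},
                    ],
                },
            },
            # One PersistentVolumeClaim per pod, so data survives rescheduling.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": "10Gi"}},
                },
            }],
        },
    }


print(json.dumps(build_statefulset(), indent=2))
```

Overriding `command` replaces the image's entrypoint, which is why the sketch calls `docker-entrypoint.sh` explicitly instead of starting `mongod` directly.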

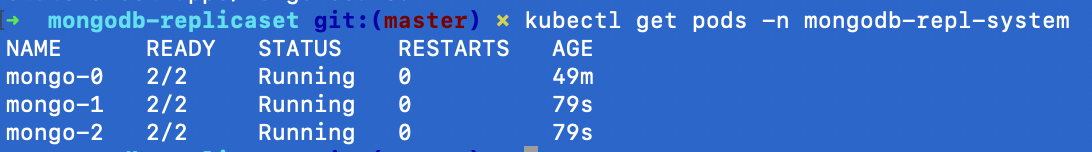

VOILA!!!

We have set up a complete MongoDB replica set! You can now grow or shrink it simply by scaling the StatefulSet with `kubectl scale statefulset`; the sidecar then updates the replica set membership to match.

You can now connect to MongoDB as the users created above.

To access MongoDB from your local machine, use `kubectl port-forward`.
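A minimal sketch of the two connection strings involved. Inside the cluster, the seed list can name every pod through the headless Service, and the driver discovers the primary. Through a port-forward, only one pod is reachable, so `directConnection=true` keeps the driver from following the member list to cluster-internal hostnames that do not resolve on your machine; writes then succeed only if the forwarded pod is the primary. The StatefulSet and Service name "mongo", namespace "default" and replica set "rs0" are assumptions.

```python
from urllib.parse import quote, urlencode


def _userinfo(user, password):
    # Percent-encode credentials so characters like "@" or ":" stay unambiguous.
    return f"{quote(user, safe='')}:{quote(password, safe='')}"


def in_cluster_uri(user, password, replicas, statefulset="mongo", service="mongo",
                   namespace="default", replica_set="rs0"):
    """Seed list with every pod's stable DNS name; the driver finds the primary."""
    hosts = ",".join(
        f"{statefulset}-{i}.{service}.{namespace}.svc.cluster.local:27017" for i in range(replicas)
    )
    query = urlencode({"replicaSet": replica_set, "authSource": "admin"})
    return f"mongodb://{_userinfo(user, password)}@{hosts}/?{query}"


def port_forward_uri(user, password, local_port=27017):
    """Talk only to the single forwarded pod."""
    query = urlencode({"authSource": "admin", "directConnection": "true"})
    return f"mongodb://{_userinfo(user, password)}@localhost:{local_port}/?{query}"


print(in_cluster_uri("root", "change-me", replicas=3))
print(port_forward_uri("root", "change-me"))
```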

Conclusion

We set up a secure MongoDB replica set on a K8s cluster with Kustomize, using MongoDB's own Docker image.

PS: You can find the code on GitHub. There you can use either a single manifest file or the separate per-resource files, which are easier to read.