An architecture built for Kubernetes at scale

Palette’s unique decentralized architecture is an unbeatable foundation for Kubernetes cluster performance, scalability and resiliency.

Enterprise Kubernetes needs a scalable architecture

In a 2023 survey of production Kubernetes users, “scalability and performance” was second only to “security” as the most important requirement for a Kubernetes management platform.

It’s easy to see why: 56% of enterprises have more than ten Kubernetes clusters, and 80% expect that number to grow in the next year.

When you have multiple clusters, in multiple environments, with many applications and many developers that need access, you need:

- Performance across the infrastructure, with no slowdown in the management interface as the environment scales and no delays executing changes in larger environments.

- Consistency, with policy enforced predictably across clusters wherever they are, so there are no snowflakes — and automation to make that consistency achievable.

- Resiliency, with clusters continuing to perform normally even if connectivity is interrupted.

We engineered Palette with a unique, patented, decentralized architecture to deliver exactly the scalable, resilient performance you’re looking for.

What it means for you

Governance

The central management plane is the ‘single source of truth’ for desired state, policy and access controls. Because you never have to duplicate management instructions cluster by cluster, or across multiple ‘management servers’, there’s less room for human error and no wasted time.

Efficiency

Only minimal instructions and reporting pass between the management plane and the cluster, so CPU and network overhead are kept to a minimum.

Scalability

Because the management platform doesn’t have to perform all the ‘heavy lifting’ of the control plane, it can easily scale to meet the demand of all your workload clusters as you grow — even to hundreds or thousands of clusters.

Parallelism

When you request a change that affects multiple clusters, for example an urgent patch, Palette can instruct each control plane to make it happen, in parallel, for much faster outcomes. The management plane never becomes a bottleneck.
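
The fan-out described above can be illustrated with a minimal Python sketch. It is not Palette code: `apply_patch`, `roll_out`, the cluster names, and the version string are all hypothetical, and a short `sleep` stands in for the work each cluster's own control plane would do.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def apply_patch(cluster: str, version: str) -> tuple:
    """Hypothetical per-cluster work, done by that cluster's own control plane."""
    time.sleep(0.05)  # stand-in for the real rollout time
    return cluster, version


def roll_out(clusters: list, version: str) -> dict:
    """Ask every cluster to apply the change at the same time."""
    # Each cluster does its own work, so the calls can overlap
    # instead of queuing behind one central worker.
    with ThreadPoolExecutor(max_workers=max(1, len(clusters))) as pool:
        results = pool.map(lambda c: apply_patch(c, version), clusters)
        return dict(results)


clusters = [f"cluster-{i}" for i in range(20)]
patched = roll_out(clusters, "1.29.4")
print(len(patched), "clusters patched")
```

In this toy model the total time should stay close to the duration of a single rollout rather than growing with the number of clusters, because the per-cluster work overlaps.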

Autonomous clusters

Each workload cluster has its own control plane, so it retains its resiliency capabilities even if the management platform experiences an outage. Self-healing, auto-recovery, launching of new nodes on failures, auto-scaling, and other policies still work!

Support for diverse connectivity models

Workload clusters can continue to operate based on “last known policy” whether or not they can reach the management plane, so Palette is ideal for use cases where network connectivity is intermittent or absent by design, such as air-gapped deployments.

How it works

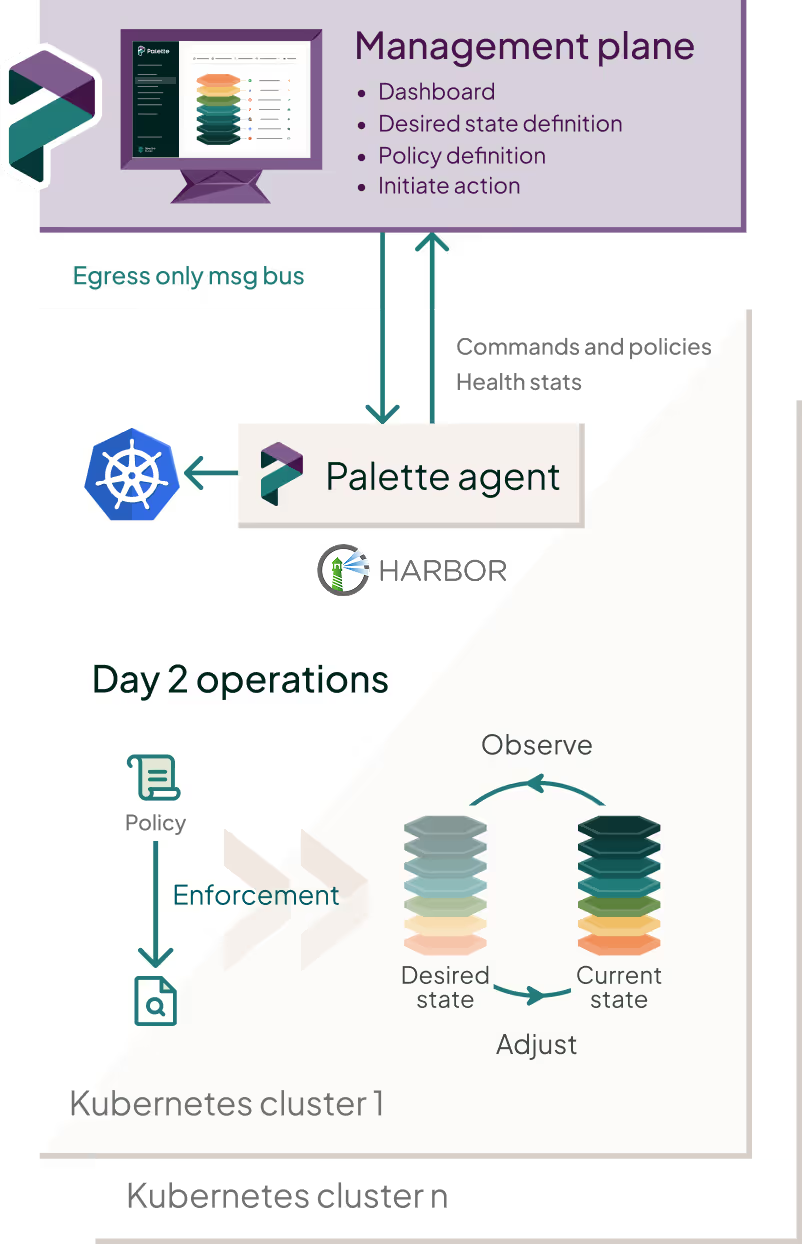

Users interact with the Palette management plane, which may be hosted in our SaaS instance, or self-hosted by you.

The management plane provides cluster health insights and enables users to define and manage policies and ‘desired state’ for each cluster, principally through building Cluster Profiles.

Changes to clusters can be driven through the Palette UI, CLI, or API, including through our Terraform and Crossplane providers.

Policies, definitions of desired state, and action commands are pulled down by each cluster’s Palette Agent, which is deployed as part of that cluster’s control plane. This agent stores policy locally and enforces it, giving the cluster auto-reconciliation and self-healing capabilities.
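
As a rough illustration of this pull-store-enforce pattern, here is a minimal Python sketch. It does not reflect the actual Palette Agent or its API: the `ManagementPlane` and `Agent` classes, their methods, and the policy keys are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ManagementPlane:
    """Hypothetical stand-in for the central source of truth."""
    desired: dict = field(default_factory=dict)  # cluster name -> desired state

    def fetch_policy(self, cluster: str) -> dict:
        return dict(self.desired.get(cluster, {}))


@dataclass
class Agent:
    """Hypothetical in-cluster agent: pulls policy, stores it, enforces it."""
    cluster: str
    local_policy: dict = field(default_factory=dict)  # stored locally
    actual: dict = field(default_factory=dict)        # observed cluster state

    def pull(self, plane: ManagementPlane) -> None:
        # The agent initiates the request (a pull) and keeps a local copy.
        self.local_policy = plane.fetch_policy(self.cluster)

    def reconcile(self) -> list:
        """Bring actual state in line with the locally stored policy."""
        changed = []
        for key, want in self.local_policy.items():
            if self.actual.get(key) != want:
                self.actual[key] = want
                changed.append(key)
        return sorted(changed)


plane = ManagementPlane({"edge-01": {"k8s_version": "1.29", "cni": "calico"}})
agent = Agent("edge-01", actual={"k8s_version": "1.28", "cni": "calico"})
agent.pull(plane)
print(agent.reconcile())               # keys that differed from desired state
agent.actual["cni"] = "manual-change"  # simulate drift inside the cluster
print(agent.reconcile())               # drift is corrected from the local copy
```

The second `reconcile()` call needs no contact with the management plane: the agent corrects drift using only the policy it already holds, which is the self-healing behavior described above.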

The agent periodically sends health status events to the management plane, and receives notifications about changes in policy and other pending actions; otherwise, network traffic is minimal.

What happens in the event of an outage or network interruption? Each local cluster agent continues with business as usual until the connection with the management plane is restored, at which point it fetches all of the changes made during the interruption.
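
The catch-up behavior can be sketched in Python as follows. This is an illustrative model only, under the assumption that changes are tracked as an ordered log with a revision counter; the source does not say how Palette tracks them, and `Plane`, `ClusterAgent`, and their methods are hypothetical.

```python
class Plane:
    """Hypothetical management plane keeping an ordered change log."""

    def __init__(self):
        self.log = []          # (key, value) policy changes, oldest first
        self.reachable = True

    def publish(self, key, value):
        self.log.append((key, value))

    def changes_since(self, revision):
        if not self.reachable:
            raise ConnectionError("management plane unreachable")
        return self.log[revision:]


class ClusterAgent:
    """Hypothetical agent that keeps its last known policy while offline."""

    def __init__(self):
        self.policy = {}       # last known policy, stored locally
        self.revision = 0      # number of changes already applied

    def sync(self, plane):
        try:
            pending = plane.changes_since(self.revision)
        except ConnectionError:
            return False       # keep the existing policy unchanged
        for key, value in pending:
            self.policy[key] = value
        self.revision += len(pending)
        return True


plane, agent = Plane(), ClusterAgent()
plane.publish("replicas", 3)
agent.sync(plane)

plane.reachable = False        # interruption begins
plane.publish("replicas", 5)   # changes made while disconnected
plane.publish("patch", "v2")
print(agent.sync(plane), agent.policy)  # sync fails, old policy is kept

plane.reachable = True         # connection restored
print(agent.sync(plane), agent.policy)  # missed changes applied in order
```

While the plane is unreachable, `sync` leaves the stored policy untouched; once it is reachable again, a single call applies everything published in the meantime.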

The exact same process applies no matter how many clusters you have, whether they run in a public cloud, a virtualized or bare metal data center, or at the edge, and regardless of which Kubernetes distribution or software stack each cluster uses.

Learn more about decentralized architecture

Take your next step

Unleash the full potential of Kubernetes at scale with Palette.

Book a 1:1 demo with one of our experts today.